Webb Space Telescope begins multi-instrument alignment

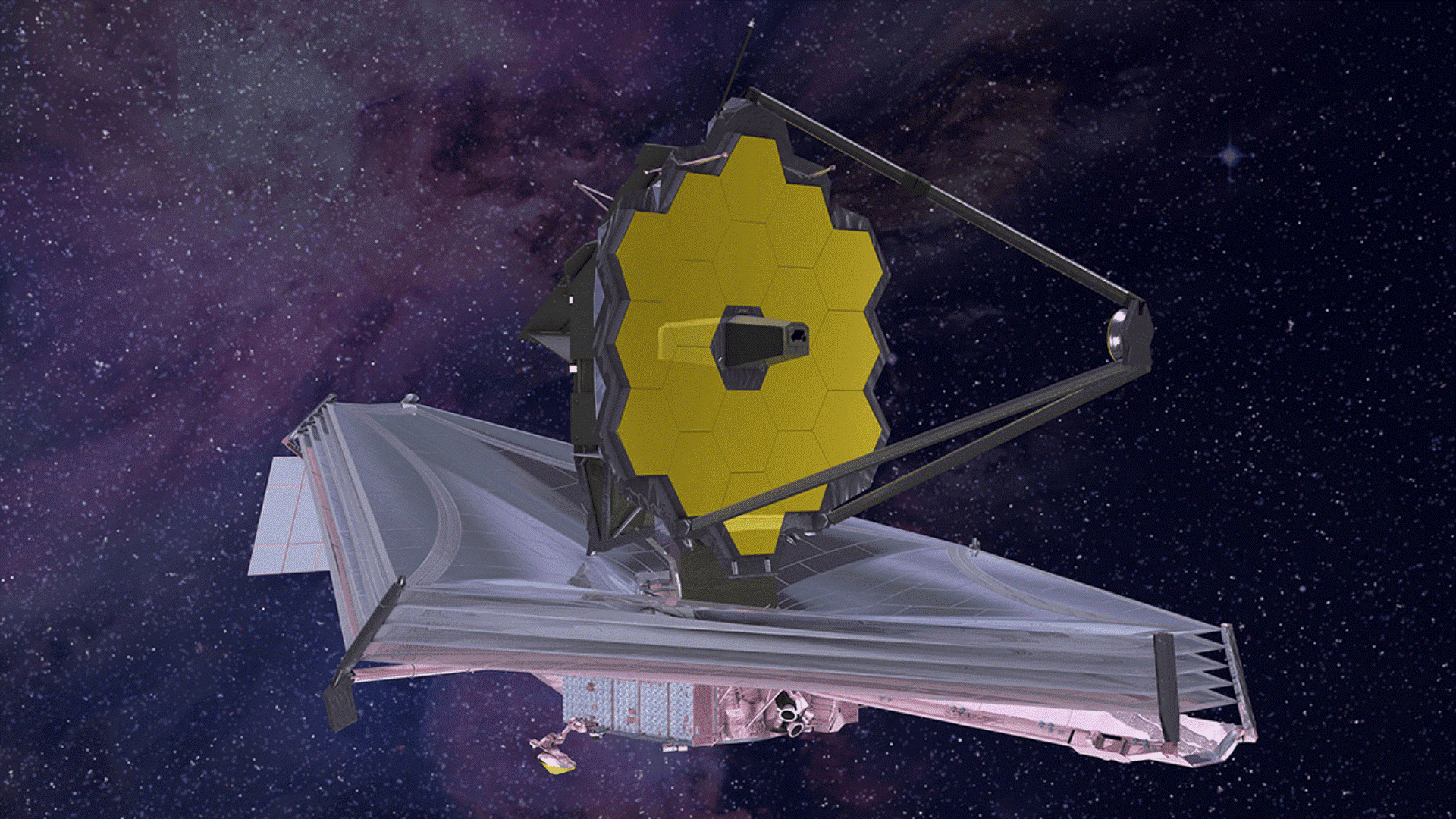

After reaching the milestone of aligning the telescope to NIRCam, the Webb team is beginning to extend the telescope's alignment to the guider (the Fine Guidance Sensor, or FGS) and the other three science instruments. This six-week process is called multi-instrument multi-field (MIMF) alignment.

When a ground-based telescope switches between cameras, the instrument is sometimes physically removed from the telescope and a new one is installed during the day, when the telescope is not in use. If the other instrument is already on the telescope, a mechanism moves part of the telescope's optics (known as a pick-off mirror) into the field of view.

On space telescopes like Webb, all the cameras see the sky at the same time. To switch a target from one camera to another, we re-point the telescope to place the target in the field of view of the other instrument.

After MIMF, Webb's telescope will provide good focus and sharp images in all of the instruments. In addition, we need to know the relative positions of all the fields of view precisely. Last weekend, we mapped the positions of the three near-infrared instruments relative to the guider and updated their positions in the software we use to point the telescope. In another instrument milestone, FGS recently achieved "fine guide" mode for the first time, locking onto a guide star using its highest precision level. We have also been taking "dark" images to measure the baseline detector response when no light reaches the detectors, an important part of instrument calibration.

Webb's mid-infrared instrument, MIRI, will be the last instrument to be aligned, as it is still waiting for the cryogenic cooler to chill it to its final operating temperature, just under 7 degrees above absolute zero (7 kelvins). Interspersed with the initial MIMF observations, the two stages of the cooler will be turned on to bring MIRI to its operating temperature. The final stages of MIMF will align the telescope for MIRI.

You may be wondering: if all the instruments can see the sky at the same time, can we use them at the same time? The answer is yes! With parallel science exposures, when we point one instrument at a target, we can read out another instrument at the same time. The parallel observations do not see the same point in the sky, so they provide what is essentially a random sample of the universe. With a lot of parallel data, scientists can determine the statistical properties of the galaxies that are detected. In addition, for programs that want to map a large area, many of the parallel images will overlap, increasing the efficiency of the valuable Webb dataset.